YouTube has said that curbing misinformation is critical for platforms and society as technology evolves and AI-powered tools emerge. The video streaming platform asserted that it will act swiftly against technically manipulated content that aims to mislead users and cause real-world harm.

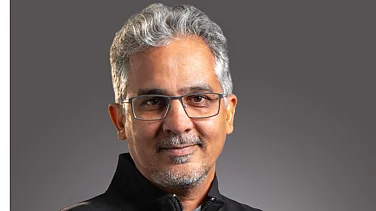

"We have well-established community guidelines in place that determine what kind of content is allowed on the platform", Ishan John Chatterjee, director, India, YouTube, said at a media briefing. Content moderation is a critical challenge for the platform and also for society as a whole as tech evolves, he said to a question on YouTube's content moderation approach in the backdrop of AI moving to the point where humans on screen are being replaced by AI avatars and powerful new age tools. As Announced by Sundar Pichai earlier this month, the platform is working on metadata and watermarks for contents generated by AI, he said.

"One of the policies besides different things like violence and graphic content is about misinformation and content that is technically manipulated to mislead users and cause real-world harm. That is not allowed on our platform and we will act against it. The company is committed to enforcing its policies consistently globally, and especially in India," according to Chatterjee.

"We have made significant investments and advances in doing it. But we know that our work here is never done, so we'll continuously keep investing in this area. The platform will continue to expand creative tools, ways to monetize, and help creators engage with audiences in meaningful ways, at the same time giving creators and viewers a safe experience to create and connect. We will continue to be laser-focused on making YouTube the best platform for long-term success," Chatterjee added.
